Lesson 2: The Context Challenge: Why LLMs Need MCP

The Isolated Genius Problem

Imagine a brilliant student taking an exam in a sealed room with no textbooks, notes, or internet access. Despite their intelligence, they can only work with what they've memorized. That is essentially how most Large Language Models (LLMs) operate today: powerful, but isolated from the rich, real-time data that lives in your company's systems.

This isolation creates serious problems. When an AI assistant can't access your latest project documents, current database records, or updated policies, it either provides generic answers or, worse, confidently states incorrect information, known as "hallucinations." For example, Air Canada's chatbot famously invented a refund policy that didn't exist, and a tribunal held the airline responsible for it. In a similar incident in California, a judge fined two law firms about $31,000 because their brief contained AI-generated legal citations that didn't actually exist.

The important thing to understand here is that the AI lacked access to the actual policy data and improvised instead. If you want to reap the productivity benefits of AI while avoiding these pitfalls, read on to learn about the Model Context Protocol (MCP).

Why Context Matters More Than Raw Intelligence

Even GPT-4 and Claude, despite their remarkable capabilities, face fundamental limitations without structured access to external information:

Fixed Knowledge Cutoffs: A model's training data stops at a cutoff date, often months or years before you're using it. Ask about recent events or newly created company policies, and the model simply doesn't know. Press it anyway and the result is often worse: it guesses from outdated information and presents the guess as fact.

Limited Context Windows: Every model can only process a finite amount of text at once, measured in tokens (roughly, word fragments). Window sizes vary widely by model, from a few thousand tokens to hundreds of thousands or more, but they are always finite. This means you can't simply include all potentially relevant information in your prompt, and in a long conversation, material you provided at the beginning can be truncated or summarized away once the window fills up.
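To make the "forgetting" concrete, here is a minimal sketch of one common way applications keep a conversation inside a fixed window: keep the newest messages and drop the oldest once a budget is exceeded. The `fit_to_window` and `count_tokens` helpers are hypothetical, and counting words is only a rough stand-in for a real tokenizer; actual applications may truncate, summarize, or re-retrieve older context instead.

```python
def count_tokens(text: str) -> int:
    """Rough proxy: one token per whitespace-separated word (real tokenizers differ)."""
    return len(text.split())


def fit_to_window(messages: list[str], budget: int) -> list[str]:
    """Keep the most recent messages whose combined size fits within `budget`.

    Walks backwards from the newest message, so the oldest messages are the
    first to be dropped once the budget is used up.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > budget:
            break  # this message and everything older is discarded
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


conversation = [
    "Our refund policy allows returns within 30 days.",  # early context
    "Can you summarize last quarter's sales?",
    "Sales grew in every region except the northeast.",
    "What is our refund window again?",
]

print(fit_to_window(conversation, budget=20))
```

With a 20-word budget, the first message (the refund policy) no longer fits, so a model answering the final question would never see it.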

No Built-in Data Fetching: Out of the box, LLMs can't query databases, call APIs, or read files. They only know what's in their training data and the current prompt, so any other information has to be gathered and pasted into the prompt by hand, often after reformatting it, which costs time and effort.

The Integration Nightmare

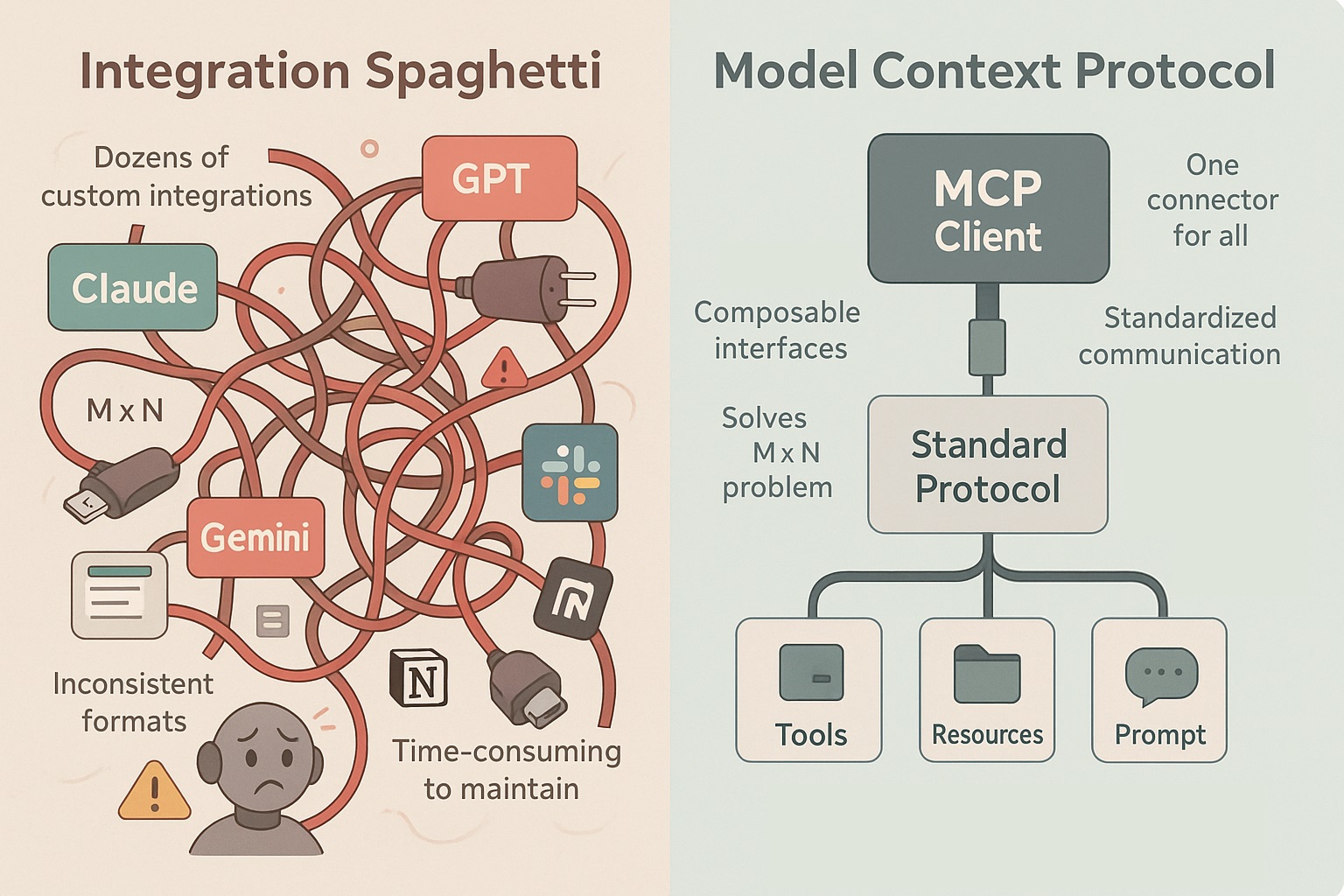

If you've tried connecting AI models to real-world data sources, you've likely encountered what developers call "integration spaghetti." This is a tangled mess of custom connectors, each built specifically for one data source and one AI system.

Think of it like having a different charger for every electronic device you own; you'd need dozens of cables, adapters would break regularly, and connecting everything would be inefficient and frustrating.

The M × N Problem

This fragmentation creates what engineers call the "M × N integration problem." If you have M different AI systems and N data sources, you potentially need M × N separate integrations. These numbers can get out of hand pretty quickly; consider that only three AI models connecting to four tools could require twelve separate integration projects!

Needless to say, this approach doesn't scale. Each connection represents:

- Custom development work

- Ongoing maintenance burden

- Potential points of failure

- Inconsistent data access across systems

Understanding Key Concepts

Before diving deeper, let's clarify some essential terminology:

Context: In AI, this means relevant information the model can use to understand and respond accurately. Without context, an LLM operates blindly, relying only on potentially outdated training data.

AI Agent: An AI system that not only responds to queries but can take actions, like scheduling meetings, querying databases, or sending emails. Agents need rich context to make smart decisions about what actions to take.

Integration: A connector linking an AI model to external data or functionality. Traditional integrations are custom-built for each combination of AI system and data source.

Protocol: A standardized set of rules that different software systems use to communicate consistently. Just as HTTP enables web browsers to talk to any web server, MCP enables AI systems to communicate with any data source that supports it, using the same language.

Now that we've discussed these terms, it's finally time to talk about the big one: MCP!

Introducing MCP: The Universal Solution

The Model Context Protocol (MCP) solves the fragmentation problem by creating a single, standardized way for AI systems to connect with external data and tools. To return to the charging-cable analogy, think of it as "USB-C for AI": one universal connector that works with any compatible system.

Simple Architecture, Powerful Results

MCP uses a straightforward client-server model:

MCP Client: Lives within your AI application (chat interface, coding assistant, etc.) and handles communication with external resources on behalf of the AI.

MCP Server: Acts as a standardized wrapper around each data source or tool. Whether it's Google Drive, Slack, or your internal database, the MCP server presents its capabilities through the same universal interface.

The Protocol: Defines three key interfaces:

- Tools: Actions the AI can perform (send email, query database, get weather)

- Resources: Data the AI can access (documents, records, images)

- Prompts: Pre-defined templates for specialized tasks
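For a concrete picture of the three interfaces, the sketch below shows roughly how a server might describe one tool, one resource, and one prompt as plain data. The field names (`name`, `description`, `inputSchema`, `uri`, `mimeType`, `arguments`) follow the shapes in the MCP specification as we understand them; the specific entries are hypothetical, and `missing_required_args` is a simplified illustration, not real JSON Schema validation.

```python
import json

# A tool: an action the AI can ask the server to perform.
# inputSchema is a JSON Schema describing the arguments the tool accepts.
get_weather_tool = {
    "name": "get_weather",  # hypothetical tool
    "description": "Get the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# A resource: data the AI can read, identified by a URI.
refund_policy_resource = {
    "uri": "file:///policies/refunds.md",  # hypothetical document
    "name": "Refund policy",
    "mimeType": "text/markdown",
}

# A prompt: a reusable template, filled in with arguments when invoked.
summarize_prompt = {
    "name": "summarize_policy",  # hypothetical prompt
    "description": "Summarize a policy document for a customer.",
    "arguments": [
        {"name": "policy_uri", "description": "Which policy to summarize", "required": True}
    ],
}


def missing_required_args(tool: dict, arguments: dict) -> list[str]:
    """Simplified check: names marked required in inputSchema but absent from arguments."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(json.dumps(get_weather_tool, indent=2))
print(missing_required_args(get_weather_tool, {}))  # ['city']
```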

Capability Discovery: Smart, Dynamic Access

When an MCP client connects to a server, it automatically asks: "What can you do? What resources and tools are available?" The server responds with a standardized list of its capabilities.

This dynamic discovery means your AI can adapt to available tools in real-time, without hardcoding specific integrations. It's like an employee accessing a new system and automatically seeing what databases and functions they have permission to use.
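Under the hood, MCP messages are JSON-RPC 2.0. The following toy, in-process sketch shows the discovery sequence as we understand it from the specification: an `initialize` request in which each side states its capabilities, a `notifications/initialized` notification, then a `tools/list` request. The server, its tools, and the `discover_tools` helper are hypothetical; real clients and servers exchange these messages over a transport such as stdio or HTTP, usually through an official SDK, and the protocol version string depends on what both sides support.

```python
import json
from typing import Callable, Optional

# Hypothetical tools this toy server exposes, each with a JSON Schema for its input.
TOOLS = [
    {"name": "search_docs", "description": "Search internal documents.",
     "inputSchema": {"type": "object", "properties": {"query": {"type": "string"}}}},
    {"name": "send_email", "description": "Send an email.",
     "inputSchema": {"type": "object", "properties": {"to": {"type": "string"}}}},
]


def toy_server(raw: str) -> Optional[str]:
    """Answer one JSON-RPC 2.0 message; notifications (no "id") get no reply."""
    msg = json.loads(raw)
    if "id" not in msg:
        return None  # e.g. notifications/initialized
    if msg["method"] == "initialize":
        result = {
            "protocolVersion": msg["params"]["protocolVersion"],
            "capabilities": {"tools": {}},  # advertise that this server offers tools
            "serverInfo": {"name": "toy-server", "version": "0.1"},
        }
    elif msg["method"] == "tools/list":
        result = {"tools": TOOLS}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": msg["id"], "result": result})


def discover_tools(send: Callable[[str], Optional[str]]) -> list[str]:
    """Run the initialize handshake, then ask the server which tools it offers."""
    init = {
        "jsonrpc": "2.0", "id": 1, "method": "initialize",
        "params": {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": "toy-client", "version": "0.1"},
        },
    }
    reply = json.loads(send(json.dumps(init)))
    send(json.dumps({"jsonrpc": "2.0", "method": "notifications/initialized"}))
    if "tools" not in reply["result"]["capabilities"]:
        return []  # the server did not advertise any tools
    request = {"jsonrpc": "2.0", "id": 2, "method": "tools/list"}
    listing = json.loads(send(json.dumps(request)))
    return [tool["name"] for tool in listing["result"]["tools"]]


print(discover_tools(toy_server))  # ['search_docs', 'send_email']
```

Because the client learns the tool list at runtime, adding a third tool to the server would change what `discover_tools` returns without any change to the client code.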

MCP's Game-Changing Benefits

That's still fairly broad, so let's look at the specific benefits you gain from applying MCP to your AI workflow.

1. Dramatically Simplified Architecture

Instead of building M × N custom integrations, MCP reduces complexity to M + N. Each AI platform implements MCP client capability once, and each data source gets wrapped by one MCP server. Any MCP-compatible AI can then use any MCP server.
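The arithmetic is easy to check. This sketch counts the pieces each approach needs for the three-model, four-tool example from the M × N discussion; the model and source names are illustrative only.

```python
from itertools import product

# Illustrative names only: M = 3 AI systems, N = 4 data sources.
models = ["GPT", "Claude", "custom-model"]
sources = ["Slack", "GitHub", "Google Drive", "Postgres"]


def point_to_point(ms: list[str], ss: list[str]) -> list[tuple[str, str]]:
    """One custom connector for every (model, source) pair: M x N pieces."""
    return list(product(ms, ss))


def via_protocol(ms: list[str], ss: list[str]) -> list[str]:
    """One MCP client per model plus one MCP server per source: M + N pieces."""
    return [f"client:{m}" for m in ms] + [f"server:{s}" for s in ss]


print(len(point_to_point(models, sources)))  # 12
print(len(via_protocol(models, sources)))    # 7
```

The gap widens as either side grows: 10 models and 20 sources would mean 200 point-to-point connectors but only 30 protocol components.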

For example, if OpenAI's GPT, Anthropic's Claude, and your custom model all support MCP, and you have MCP servers for Slack and GitHub, any of those models can immediately use either service. No separate plugins required.

2. Consistent, Rich Context

Because everything flows through one standardized channel, AI systems maintain context better across different tools. An AI can use results from a Salesforce query and then send an email based on that data, all in one coherent workflow.
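A minimal sketch of that kind of chained workflow: one tool's output becomes the next tool's input, and both are invoked through the same name-plus-arguments convention (in MCP, each would be a `tools/call` request). The `query_accounts` and `send_email` tools are hypothetical in-memory stand-ins for a CRM server and an email server, and the sequence is hard-coded here, whereas in a real agent the model would decide which tools to call.

```python
from typing import Any, Callable


def query_accounts(region: str) -> list[dict[str, Any]]:
    """Hypothetical stand-in for a CRM query; filters fake in-memory records."""
    records = [
        {"name": "Acme", "region": "west", "renewal_due": True},
        {"name": "Globex", "region": "west", "renewal_due": False},
        {"name": "Initech", "region": "east", "renewal_due": True},
    ]
    return [r for r in records if r["region"] == region]


SENT: list[dict[str, str]] = []  # records outgoing mail instead of sending it


def send_email(to: str, body: str) -> str:
    """Hypothetical stand-in for an email tool; stores the message, returns a status."""
    SENT.append({"to": to, "body": body})
    return "queued"


TOOLS: dict[str, Callable[..., Any]] = {
    "query_accounts": query_accounts,
    "send_email": send_email,
}


def call_tool(name: str, arguments: dict[str, Any]) -> Any:
    """Uniform entry point: every tool is invoked the same way, by name plus arguments."""
    return TOOLS[name](**arguments)


def renewal_reminder(region: str, manager: str) -> str:
    """Two-step workflow: the query result feeds directly into the email."""
    accounts = call_tool("query_accounts", {"region": region})
    due = [a["name"] for a in accounts if a["renewal_due"]]
    body = f"Renewals due in {region}: {', '.join(due) or 'none'}"
    return call_tool("send_email", {"to": manager, "body": body})


print(renewal_reminder("west", "manager@example.com"))  # queued
print(SENT[-1]["body"])  # Renewals due in west: Acme
```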

The fragmentation disappears, allowing AI agents to carry information between tools naturally and handle longer, more complex tasks.

3. Future-Proof and Vendor-Agnostic

MCP isn't tied to any specific AI model or vendor. Switch from GPT-4 to Claude tomorrow? Your integrations still work. Add a new data source? It works with all your AI systems immediately.

This prevents vendor lock-in and encourages innovation; because it's a universal connector, you can mix and match the best AI models with the best tools.

4. Enhanced Security and Control

Finally, MCP gives you a consistent place to manage permissions. Each server can enforce authentication and access controls, ensuring AI systems only access what they're authorized to use. Because interactions are standardized, it's also easier to audit what the AI accessed and did.
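The sketch below illustrates the idea on the server side: check each call against a per-client allowlist and record every attempt, allowed or not. `GuardedServer`, the client id, and the ticket tools are hypothetical, and the caller's identity is simply trusted here; how a real MCP server authenticates clients and stores audit records is up to its implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass
class GuardedServer:
    """Toy server that enforces a per-client allowlist and logs every attempt."""
    tools: dict[str, Callable[..., Any]]
    permissions: dict[str, set[str]]  # client id -> tool names it may call
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def call_tool(self, client_id: str, name: str, arguments: dict[str, Any]) -> Any:
        allowed = name in self.permissions.get(client_id, set())
        # Record the attempt before acting, whether or not it is permitted.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "client": client_id,
            "tool": name,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{client_id} may not call {name}")
        return self.tools[name](**arguments)


server = GuardedServer(
    tools={
        "read_ticket": lambda ticket_id: f"Ticket {ticket_id}: printer jammed",
        "delete_ticket": lambda ticket_id: f"Deleted {ticket_id}",
    },
    permissions={"support-bot": {"read_ticket"}},  # a read-only assistant
)

print(server.call_tool("support-bot", "read_ticket", {"ticket_id": 42}))
try:
    server.call_tool("support-bot", "delete_ticket", {"ticket_id": 42})
except PermissionError as err:
    print("Blocked:", err)

print([(entry["tool"], entry["allowed"]) for entry in server.audit_log])
```

Because every request passes through the same `call_tool` entry point, one check and one log cover every tool the server exposes.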

MCP vs. Alternative Approaches

Understanding how MCP compares to other solutions helps clarify its unique value:

Custom API Integrations: Offer complete control but require extensive custom code for each connection. MCP provides standardization while maintaining flexibility.

Plugin Systems: Work well within single platforms (like ChatGPT's since-retired plugins) but create vendor lock-in. MCP is vendor-agnostic and works across any compatible AI system.

RAG (Retrieval-Augmented Generation): Excellent for static document retrieval but limited to read-only access. MCP complements RAG by adding action capabilities and broader tool integration.

Agent Frameworks: Provide comprehensive development tools but often require significant coding. MCP can serve as the integration layer for these frameworks, simplifying their implementation.

Key Takeaways

- Context is crucial for AI effectiveness: Without access to relevant, up-to-date information, even advanced models produce subpar or incorrect results. The Air Canada chatbot incident demonstrates the real-world consequences of context failures.

- Integration fragmentation limits AI potential: Traditional one-off integrations create maintenance nightmares and don't scale. Under the M × N problem, the number of integrations grows multiplicatively: every new AI system needs a connector for every data source, and vice versa.

- MCP provides a universal integration standard: By standardizing how AI systems communicate with external resources, MCP turns integration growth from multiplicative (M × N) into additive (M + N).

- Dynamic capability discovery enables smarter AI: Rather than hardcoding integrations, MCP allows AI systems to discover available tools and data in real-time, leading to more flexible and capable agents.

- The benefits extend beyond technical simplification: MCP enables richer AI responses, easier scaling, better security management, and future-proof architecture that adapts as your AI and data ecosystem evolves.

Closing Thoughts

As AI continues evolving from clever tools to integrated assistants, structured context protocols like MCP become essential infrastructure. They ensure AI systems can access the full picture rather than operating in isolation, delivering the reliable, context-aware performance that real-world applications demand.

Before building AI features, list all the context sources your system will need: documents, databases, APIs, user data, et cetera. Instead of hardcoding connections to each source, design a unified context interface. Even if you don't use MCP directly, thinking in terms of standardized protocols will save significant development time and create more maintainable systems.
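One way to start on that unified interface, sketched under our own assumptions rather than MCP's actual API: define a single `ContextSource` protocol with a `fetch` method, make each source implement it, and have the application query every source the same way. Everything here (`ContextSource`, `DocumentStore`, `CustomerTable`, `gather_context`) is hypothetical, and the keyword matching is deliberately naive.

```python
from typing import Protocol


class ContextSource(Protocol):
    """Hypothetical common interface that every context source implements."""
    name: str

    def fetch(self, query: str) -> list[str]:
        """Return text snippets relevant to `query`."""
        ...


class DocumentStore:
    def __init__(self, docs: dict[str, str]) -> None:
        self.name = "documents"
        self.docs = docs

    def fetch(self, query: str) -> list[str]:
        # Naive keyword match; a real store might use full-text search or embeddings.
        return [text for text in self.docs.values() if query.lower() in text.lower()]


class CustomerTable:
    def __init__(self, rows: list[dict[str, str]]) -> None:
        self.name = "customers"
        self.rows = rows

    def fetch(self, query: str) -> list[str]:
        # Stand-in for a database query: match on the customer name column.
        return [f"{r['name']} ({r['plan']})" for r in self.rows
                if query.lower() in r["name"].lower()]


def gather_context(sources: list[ContextSource], query: str) -> dict[str, list[str]]:
    """Ask every registered source the same question through the same method."""
    return {source.name: source.fetch(query) for source in sources}


sources: list[ContextSource] = [
    DocumentStore({"refunds": "Acme customers get refunds within 30 days."}),
    CustomerTable([{"name": "Acme", "plan": "enterprise"},
                   {"name": "Globex", "plan": "basic"}]),
]
print(gather_context(sources, "acme"))
```

Adding a new source means writing one more class with a `fetch` method; `gather_context` and the rest of the application stay unchanged, which is the same property MCP provides at the protocol level.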

Understand that the future of AI isn't just about smarter models; it's about connecting those models to the rich, dynamic world of data and tools they need to truly augment human capabilities.